Pluralsight – Extracting Structured Data from the Web Using Scrapy

English | Size: 348.02 MB

Category: Tutorial

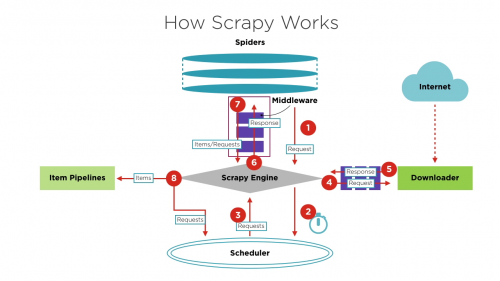

Websites contain meaningful information that can drive decisions within your organization. The Scrapy package in Python makes crawling websites and scraping their structured content easy and intuitive, while also letting crawls scale to hundreds of thousands of websites. In this course, Extracting Structured Data from the Web Using Scrapy, you will learn how to scrape raw content from web pages and save it for later use in a structured, meaningful format. You will start off by exploring how Scrapy works and how to use CSS and XPath selectors in Scrapy to select the relevant portions of a web page. You'll use the Scrapy shell to prototype the selectors you want to use when building Spiders. Next, you'll learn how Spiders specify what to crawl, how to crawl it, and how to process the scraped data. You'll also learn how to take your Spiders to the cloud using Scrapy Cloud. The cloud platform offers advanced scraping functionality, including a visual tool called Portia with which you can build a Spider without writing a single line of code. By the end of this course, you will be able to build your own spiders and crawlers to extract insights from any website. This course uses Scrapy version 1.5 and Python 3.

Contents:

– Course Overview

– Getting Started Scraping Web Sites Using Scrapy

– Using Spiders to Crawl Sites

– Building Crawlers Using Built-in Services in Scrapy

– Deploying Crawlers Using Scrapy Cloud

About the author

A problem solver at heart, Janani has a master's degree from Stanford and worked for more than 7 years at Google. She was one of the original engineers on Google Docs and holds 4 patents for its real-time collaborative editing framework.
